Important: since the material is quite technical, some of the text is copied largely verbatim from the thesis (see References). For a more compact presentation, see the Neural Computation article.

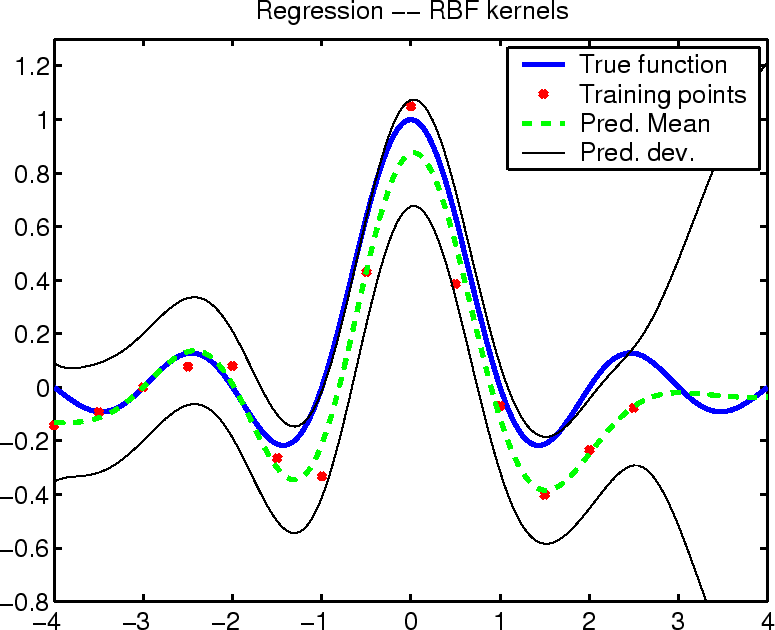

Regression with additive Gaussian noise

A simple example of the above procedure is regression under the assumption of additive Gaussian noise. The accompanying image shows the resulting mean of the posterior process together with the true function and the predicted variance around the mean.

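Let us assume that the additive Gaussian noise has variance $\sigma_0^2$. At time $t+1$ we need the marginal of $GP(\alpha_t, C_t)$ taken at the new data point $x_{t+1}$. This marginal is a normal distribution with mean $m_{t+1} = k_{t+1}^T \alpha_t$ and variance $\sigma_{t+1}^2 = k_* + k_{t+1}^T C_t k_{t+1}$. The coefficients $q^{(t+1)}$ and $r^{(t+1)}$ are:

$$q^{(t+1)} = \frac{y_{t+1} - m_{t+1}}{\sigma_0^2 + \sigma_{t+1}^2}, \qquad r^{(t+1)} = -\frac{1}{\sigma_0^2 + \sigma_{t+1}^2}$$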
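The update for this case can be sketched in a few lines of NumPy. This is a minimal illustration, not the author's implementation. It assumes the standard online recursion, in which the mean coefficients and the matrix C are each extended by a zero and then corrected by q·s and r·s·sᵀ with s = [C k; 1]. It also assumes a squared-exponential kernel and no sparsification. The names `rbf` and `online_gp_regression` and the toy data are hypothetical.

```python
import numpy as np

def rbf(a, b, length=1.0):
    """Squared-exponential kernel between 1-D input arrays (an assumed choice)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

def online_gp_regression(x, y, noise_var, kernel=rbf):
    """Process the data one point at a time; return the GP parameters (alpha, C)."""
    alpha = np.zeros(0)
    C = np.zeros((0, 0))
    for t in range(len(x)):
        x_new = x[t:t + 1]
        k = kernel(x[:t], x_new)[:, 0]        # kernel between old inputs and the new one
        k_star = kernel(x_new, x_new)[0, 0]   # prior variance at the new input
        m = k @ alpha                         # marginal mean at the new input
        var = k_star + k @ C @ k              # marginal variance at the new input
        q = (y[t] - m) / (noise_var + var)    # Gaussian-noise coefficients
        r = -1.0 / (noise_var + var)
        s = np.append(C @ k, 1.0)
        alpha = np.append(alpha, 0.0) + q * s
        C_new = np.zeros((t + 1, t + 1))      # extend C by a zero row and column
        C_new[:t, :t] = C
        C = C_new + r * np.outer(s, s)
    return alpha, C

# Toy data (illustrative only): noisy samples of sin(x).
rng = np.random.default_rng(0)
noise_var = 0.05
x_train = rng.uniform(-3.0, 3.0, size=20)
y_train = np.sin(x_train) + np.sqrt(noise_var) * rng.standard_normal(20)

alpha, C = online_gp_regression(x_train, y_train, noise_var)

# Posterior mean and variance of the latent function at a few test inputs.
x_test = np.linspace(-3.0, 3.0, 5)
K_s = rbf(x_test, x_train)
mean = K_s @ alpha
var = 1.0 + np.einsum("ij,jk,ik->i", K_s, C, K_s)  # rbf(x, x) equals 1
```

After all points have been processed, `alpha` and `C` should agree, up to rounding, with the batch quantities obtained by solving with and negating the inverse of the kernel matrix plus `noise_var` times the identity.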

Exact computation, as has been mentioned, is only possible for the case above. The online approximation is employed for non-standard regression, where the noise is additive but its distribution is non-Gaussian.
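For a non-Gaussian noise model the two coefficients generally have no closed form, but they can be approximated numerically. The sketch below assumes that q and r are the first and second derivatives, with respect to the marginal mean, of the log of the likelihood averaged over the Gaussian marginal. Under that assumption they can be written in terms of the mean and variance of the one-dimensional tilted distribution, which is estimated here with Gauss-Hermite quadrature. The function names, the Laplace noise model and all numerical values are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite import hermgauss

def update_coefficients(log_lik, y, m, var, n_nodes=60):
    """Approximate q and r for a marginal N(m, var) and a likelihood given by log_lik(y, f)."""
    nodes, weights = hermgauss(n_nodes)
    f = m + np.sqrt(2.0 * var) * nodes     # quadrature points under N(m, var)
    w = weights * np.exp(log_lik(y, f))    # unnormalised weights of the tilted distribution
    w = w / w.sum()                        # constant factors of the likelihood cancel here
    post_mean = w @ f
    post_var = w @ (f - post_mean) ** 2
    q = (post_mean - m) / var              # first derivative of the log averaged likelihood
    r = post_var / var ** 2 - 1.0 / var    # second derivative of the log averaged likelihood
    return q, r

def gauss_log_lik(y, f, noise_var=0.3):
    return -0.5 * (y - f) ** 2 / noise_var

def laplace_log_lik(y, f, scale=0.5):
    return -np.abs(y - f) / scale

y_obs, m, var = 1.2, 0.4, 0.5

# Sanity check: Gaussian noise should reproduce the closed-form coefficients.
q_gauss, r_gauss = update_coefficients(gauss_log_lik, y_obs, m, var)
q_exact = (y_obs - m) / (0.3 + var)
r_exact = -1.0 / (0.3 + var)

# Laplace noise: no closed form is used, only the quadrature estimate.
q_lap, r_lap = update_coefficients(laplace_log_lik, y_obs, m, var)
```

The Laplace likelihood has a kink at the observation, so the quadrature estimate is less accurate there than in the smooth Gaussian case; more nodes or a different integration rule may be needed in practice.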

For this exact case of regression with Gaussian noise one can show that the iterative online update is actually the iterative matrix inversion formula (presented in detail in Appendix C of the thesis).
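The connection can be illustrated with the partitioned (bordering) inverse: given the inverse of a symmetric matrix, the inverse of the same matrix grown by one row and column follows from a rank-one correction. The sketch below is a generic NumPy illustration under that reading, not the derivation from the thesis. The function name `extend_inverse`, the squared-exponential kernel and the test values are hypothetical.

```python
import numpy as np

def extend_inverse(A_inv, b, c):
    """Inverse of [[A, b], [b^T, c]] computed from the inverse of the symmetric block A."""
    u = A_inv @ b
    schur = c - b @ u                 # Schur complement of A in the grown matrix
    n = len(b)
    M_inv = np.empty((n + 1, n + 1))
    M_inv[:n, :n] = A_inv + np.outer(u, u) / schur
    M_inv[:n, n] = -u / schur
    M_inv[n, :n] = -u / schur
    M_inv[n, n] = 1.0 / schur
    return M_inv

# Kernel matrix plus noise on the diagonal for six random inputs (illustrative values).
rng = np.random.default_rng(0)
x = rng.uniform(-3.0, 3.0, size=6)
noise_var = 0.1
K = np.exp(-0.5 * (x[:, None] - x[None, :]) ** 2)
A = K + noise_var * np.eye(6)

# Grow the inverse of the leading 5x5 block by the sixth point.
A_inv = np.linalg.inv(A[:5, :5])
grown = extend_inverse(A_inv, A[:5, 5], A[5, 5])
max_err = np.max(np.abs(grown - np.linalg.inv(A)))
```

If C is taken to be the negative of the inverse of the kernel matrix plus the noise variance on the diagonal, the Schur complement above equals the noise variance plus the marginal variance at the new point, so the rank-one correction has the same form as the update of C with a coefficient r equal to minus one over that sum.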

Questions, comments, suggestions: contact Lehel Csató.